Distributed systems form the invisible backbone of the modern digital world, powering cloud platforms, social networks, e-commerce, and virtually every scalable application today. Yet, as Tim Berglund’s memorable talk “Distributed Systems in One Lesson” reveals, the true lesson isn’t about shiny tech or clever algorithms. It’s about a single, stubborn fact: failure is not a special case; failure is the rule.

Embracing the Hard Truth: Failure is Inevitable

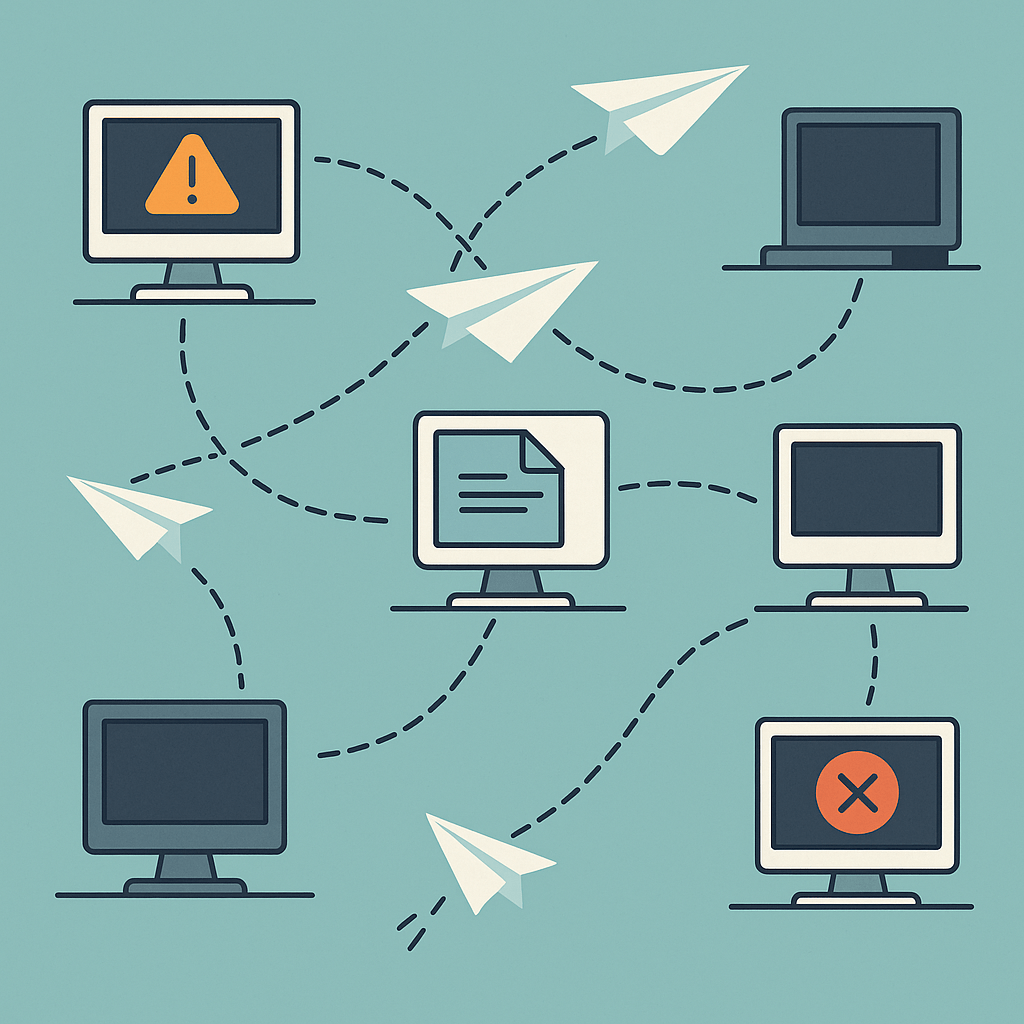

At their core, distributed systems are simply collections of computers working together to solve a problem that no single computer could handle alone. The payoff is scale, speed, and redundancy, but the cost is unpredictability. More machines mean more opportunities for things to go wrong: networks split, nodes vanish, and messages are lost or delayed.

The one lesson:

“If you want to build robust distributed systems, design as if everything will fail because, sooner or later, it will.”

Key Principles for Building Reliable Distributed Systems

1. Design for Failure from Day One

- Anticipate outages: Don’t assume remote calls will always succeed. Always code with timeouts, retries, and error handling.

- Test with chaos: Apply chaos engineering principles: deliberately simulate network splits, machine failures, and latency spikes to validate resilience.

- Monitor everything: Observability is your safety net. Log, trace, and alert on failures, slowdowns, and unexpected behaviors.
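The timeout-and-retry advice above can be sketched in Python. This is a minimal sketch, not a library API: `call_with_retries` and `flaky_fetch` are hypothetical names, and it assumes the wrapped operation enforces its own timeout (most network clients accept a timeout parameter) and signals transient failures by raising `TimeoutError` or `ConnectionError`. Retries use capped exponential backoff with random jitter so that many clients don't hammer a recovering service in lockstep.

```python
import random
import time


def call_with_retries(operation, max_attempts=4, base_delay=0.1, max_delay=2.0, sleep=time.sleep):
    """Call operation() and retry transient failures with capped, jittered exponential backoff.

    Assumption: `operation` is a zero-argument callable that applies its own timeout
    and raises TimeoutError or ConnectionError when a call fails transiently.
    """
    for attempt in range(1, max_attempts + 1):
        try:
            return operation()
        except (TimeoutError, ConnectionError):
            if attempt == max_attempts:
                raise  # out of retries: surface the failure to the caller
            # Backoff ceiling doubles each attempt (0.1s, 0.2s, 0.4s, ...) up to max_delay;
            # sleeping a random fraction of it ("full jitter") spreads retries out.
            delay = min(max_delay, base_delay * 2 ** (attempt - 1))
            sleep(random.uniform(0, delay))


# Hypothetical flaky dependency: times out twice, then succeeds.
attempts = {"count": 0}


def flaky_fetch():
    attempts["count"] += 1
    if attempts["count"] < 3:
        raise TimeoutError("simulated timeout")
    return "ok"


result = call_with_retries(flaky_fetch, sleep=lambda s: None)  # skip real sleeping in the demo
print(result, attempts["count"])  # ok 3
```

Only retry operations that are safe to repeat; for anything with side effects, pair retries with the idempotency techniques covered under "Idempotency and Reliable Operations."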

2. Understand Consistency Models

Distributed data comes with trade-offs. Not all systems guarantee that everyone sees the same data instantly.

- Strong consistency: Once a write completes, every subsequent read sees it. This is good for banks or ledgers, but the coordination it requires can slow things down.

- Eventual consistency: Updates propagate over time. This is great for scale, but replicas can temporarily disagree, so users may briefly see mismatched data. Examples: social media timelines, product recommendations.

Choose your model carefully, depending on business needs.
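To make the difference concrete, here is a toy model: a hypothetical `ReplicatedRegister` class, not any real database's API, with one primary and one asynchronously updated replica. Reading from the primary behaves like strong consistency; reading from the replica behaves like eventual consistency, returning stale data until replication catches up.

```python
from collections import deque


class ReplicatedRegister:
    """Toy model: one primary and one replica with asynchronous replication.

    Writes go to the primary and are queued for the replica; the replica only
    catches up when replicate() runs (standing in for background propagation).
    """

    def __init__(self, value=None):
        self.primary = value
        self.replica = value
        self.pending = deque()  # updates not yet applied to the replica

    def write(self, value):
        self.primary = value
        self.pending.append(value)

    def read_strong(self):
        # Served by the primary, so it always reflects the latest write.
        return self.primary

    def read_eventual(self):
        # Served by the replica, which may lag behind the primary.
        return self.replica

    def replicate(self):
        # Apply queued updates in order; afterwards the replica has converged.
        while self.pending:
            self.replica = self.pending.popleft()


reg = ReplicatedRegister("v1")
reg.write("v2")
print(reg.read_strong(), reg.read_eventual())  # v2 v1  (stale replica read)
reg.replicate()
print(reg.read_strong(), reg.read_eventual())  # v2 v2  (converged)
```

Real systems are far more involved; this sketch only illustrates the window during which an eventually consistent read can be stale.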

3. Partitioning and the CAP Theorem

The CAP theorem says: In the presence of a network partition, a system must choose between Consistency and Availability.

- Consistency: Every read returns the most recent write or an error, so all users see the same data, even if some requests fail.

- Availability: Every request to a working node gets a response, even if some responses are stale.

- Partition tolerance: The system keeps working, even if some parts can’t talk to each other.

Real-world implication:

Network failures will happen, so you must tolerate partitions. The real decision is whether to favor consistency or availability while a partition lasts.
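The CAP choice shows up in how a replica answers while it is cut off from its peers. The toy `PartitionedReplica` below is hypothetical and for illustration only: in "CP" mode it refuses to answer during a partition, and in "AP" mode it serves its local copy, which may be stale.

```python
class Unavailable(Exception):
    """Raised when a CP replica refuses to answer during a partition."""


class PartitionedReplica:
    def __init__(self, mode, local_value):
        if mode not in ("CP", "AP"):
            raise ValueError("mode must be 'CP' or 'AP'")
        self.mode = mode
        self.local_value = local_value
        self.partitioned = False  # True while the replica can't reach its peers

    def read(self):
        if self.partitioned and self.mode == "CP":
            # Can't confirm this copy is current, so refuse rather than risk staleness.
            raise Unavailable("partitioned from primary")
        # No partition, or AP mode: answer from the local copy (possibly stale in AP).
        return self.local_value


bank = PartitionedReplica("CP", "balance=100")
feed = PartitionedReplica("AP", "likes=41")
bank.partitioned = feed.partitioned = True  # simulate a network split

print(feed.read())  # likes=41 (answered, but may be stale)
try:
    bank.read()
except Unavailable as err:
    print("bank replica refused:", err)  # bank replica refused: partitioned from primary
```

A ledger would typically pick the CP behavior, while a social feed can usually live with the AP behavior.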

4. Idempotency and Reliable Operations

Retries are part of life in distributed systems. But if the same operation is executed twice, will it break something?

- Idempotent APIs ensure that repeating a request (e.g., charging a customer, creating a record) produces the same effect as doing it once.

- Use unique transaction IDs and status checks to avoid duplicate actions.
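A common way to apply unique IDs is an idempotency key: the client generates one key per logical operation and sends the same key on every retry, and the server stores the first result and replays it for duplicates. The `PaymentService` class below is a hypothetical, in-memory sketch of that pattern, not a real payment API.

```python
import uuid


class PaymentService:
    """Hypothetical payment API that deduplicates requests by idempotency key."""

    def __init__(self):
        self.results = {}      # idempotency key -> stored response
        self.charges_made = 0  # how many times money actually moved

    def charge(self, idempotency_key, customer_id, amount_cents):
        if idempotency_key in self.results:
            # Duplicate (e.g., a retry after a lost response): replay the saved result.
            return self.results[idempotency_key]
        self.charges_made += 1
        response = {"customer": customer_id, "amount_cents": amount_cents, "status": "charged"}
        self.results[idempotency_key] = response
        return response


service = PaymentService()
key = str(uuid.uuid4())  # generated once per logical payment, not once per attempt
first = service.charge(key, "cust-42", 1999)
retry = service.charge(key, "cust-42", 1999)  # simulated retry after a timeout
print(first == retry, service.charges_made)  # True 1
```

In production the check-and-store must be atomic, for example enforced by a unique constraint on the key in the database; otherwise two concurrent duplicates could both pass the check.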

5. Resilience Patterns: Circuit Breakers & Bulkheads

- Circuit breakers: Temporarily cut off calls to a failing service, letting it recover and preventing cascading failures.

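- Bulkheads: Isolate parts of your system, for example with separate thread or connection pools per dependency, so one failure can't exhaust shared resources and bring down everything.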
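One simple way to build a bulkhead is to cap concurrent calls per dependency. The sketch below uses a hypothetical `Bulkhead` class built on `threading.BoundedSemaphore`; once the compartment is full it rejects new calls immediately, so a hung dependency can tie up at most `max_concurrent` threads. The demo uses a `threading.Barrier` only to make the timing deterministic.

```python
import threading


class Bulkhead:
    """Cap concurrent calls to one dependency so it can't exhaust shared capacity."""

    def __init__(self, max_concurrent):
        self._slots = threading.BoundedSemaphore(max_concurrent)

    def call(self, fn, *args, **kwargs):
        # Non-blocking acquire: if this compartment is full, fail fast instead of queueing.
        if not self._slots.acquire(blocking=False):
            raise RuntimeError("bulkhead full: rejecting call")
        try:
            return fn(*args, **kwargs)
        finally:
            self._slots.release()


bulkhead = Bulkhead(max_concurrent=2)
entered = threading.Barrier(3)  # 2 worker threads + the main thread
release = threading.Event()


def hung_dependency():
    entered.wait()   # this call now holds one of the two slots
    release.wait()   # simulate a slow downstream service
    return "done"


workers = [threading.Thread(target=bulkhead.call, args=(hung_dependency,)) for _ in range(2)]
for w in workers:
    w.start()
entered.wait()  # both slots are now occupied

try:
    bulkhead.call(lambda: "other work")
    rejected = False
except RuntimeError:
    rejected = True  # the third caller fails fast instead of piling up

release.set()
for w in workers:
    w.join()
print(rejected, bulkhead.call(lambda: "other work"))  # True other work
```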

Practical example:

If your payment gateway goes down, trip a circuit breaker so your website doesn’t freeze for all users; degrade gracefully instead.
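Here is a minimal circuit breaker sketch, assuming single-threaded use. After `failure_threshold` consecutive failures it opens and fails fast; once `reset_timeout` seconds have passed it lets one trial call through (half-open) and closes again if that call succeeds. `CircuitBreaker`, `CircuitOpenError`, and the failing payment gateway are illustrative names, not a specific library; the injectable `clock` lets the demo fast-forward time.

```python
import time


class CircuitOpenError(Exception):
    """Raised when the breaker is open and the call is short-circuited."""


class CircuitBreaker:
    """Minimal closed -> open -> half-open circuit breaker (single-threaded sketch)."""

    def __init__(self, failure_threshold=3, reset_timeout=30.0, clock=time.monotonic):
        self.failure_threshold = failure_threshold
        self.reset_timeout = reset_timeout
        self.clock = clock
        self.failures = 0
        self.opened_at = None  # None means the circuit is closed

    def call(self, fn, *args, **kwargs):
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_timeout:
                # Open: fail fast without touching the struggling service.
                raise CircuitOpenError("circuit open")
            # Reset timeout elapsed: half-open, so let this trial call through.
        try:
            result = fn(*args, **kwargs)
        except Exception:
            self.failures += 1
            if self.opened_at is not None or self.failures >= self.failure_threshold:
                self.opened_at = self.clock()  # trip, or re-trip after a failed trial
            raise
        # Success: close the circuit and reset the failure count.
        self.failures = 0
        self.opened_at = None
        return result


now = [0.0]
breaker = CircuitBreaker(failure_threshold=2, reset_timeout=10.0, clock=lambda: now[0])


def payment_gateway_down():
    raise ConnectionError("gateway unreachable")


for _ in range(2):
    try:
        breaker.call(payment_gateway_down)
    except ConnectionError:
        pass  # real failures while the circuit is still closed

try:
    breaker.call(payment_gateway_down)
except CircuitOpenError:
    print("fail fast: show 'payments temporarily unavailable'")

now[0] = 11.0  # after the reset timeout, one trial call is allowed
print(breaker.call(lambda: "charged"))  # charged (circuit closes again)
```

A production breaker would also need thread safety and would typically limit how many trial calls run while half-open.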

Actionable Takeaways for Engineers

- Never trust the network: Always handle the case where a message is delayed, dropped, or duplicated.

- Monitor, alert, and recover: Automated systems are only as good as their feedback loops.

- Test for disaster: Inject chaos on purpose to see how your systems cope—and fix what breaks.
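Tools like Chaos Monkey inject failure at the infrastructure level, but the same idea works in ordinary tests. This sketch wraps a hypothetical `fetch_profile` call so that roughly 30% of calls raise an injected `ConnectionError`, then checks that the caller degrades to a fallback instead of crashing. `inject_faults` and `fetch_with_fallback` are illustrative names; seeding `random.Random` keeps the chaos reproducible from run to run.

```python
import random


def inject_faults(fn, failure_rate, rng):
    """Wrap fn so that a fraction of calls fail with a simulated network error."""
    def chaotic(*args, **kwargs):
        if rng.random() < failure_rate:
            raise ConnectionError("injected fault")
        return fn(*args, **kwargs)
    return chaotic


def fetch_profile(user_id):
    # Hypothetical dependency standing in for a real remote call.
    return {"id": user_id}


def fetch_with_fallback(fetch, user_id):
    # The behavior under test: degrade to a default instead of crashing.
    try:
        return fetch(user_id)
    except ConnectionError:
        return {"id": user_id, "fallback": True}


chaotic_fetch = inject_faults(fetch_profile, failure_rate=0.3, rng=random.Random(7))
results = [fetch_with_fallback(chaotic_fetch, n) for n in range(100)]
fallbacks = sum(1 for r in results if r.get("fallback"))
print(f"{fallbacks} of 100 calls degraded gracefully; none crashed")
```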

Real-World Inspiration

- Netflix’s “Chaos Monkey” randomly terminates instances in production to prove that services survive the loss.

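- Amazon’s DynamoDB, Google Spanner, and Apache Kafka each make explicit trade-offs around consistency, partition tolerance, and failure handling: DynamoDB serves eventually consistent reads by default with strongly consistent reads as an option, Spanner provides strongly consistent transactions, and Kafka replicates partitioned logs across brokers.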

The Bottom Line

Distributed systems are the foundation of digital scale, but their defining feature is failure, not perfection. If you architect with this truth in mind, you’ll build solutions that don’t just work on a whiteboard; they survive, adapt, and thrive in the real world.

Want to learn more?

- Explore concepts like the CAP theorem, consensus algorithms (Paxos, Raft), and modern observability stacks (Prometheus, Grafana).

- Read “Designing Data-Intensive Applications” by Martin Kleppmann for deeper dives.

- Practice building small distributed apps and try breaking them yourself!

Remember:

“Success in distributed systems comes not from avoiding failure, but from expecting and managing it.”

Inspired by Tim Berglund’s “Distributed Systems in One Lesson.” For a deeper dive, watch the full talk.
